It feels like everyone is jumping on the Digital Twin bandwagon these days. But how do we get them to play nice together and stop everyone from reinventing the wheel? Geonovum is tackling this challenge by developing an architecture for Digital Twins that makes it easier to create reusable components. This architecture is built around what’s known as the ‘triangle model’.

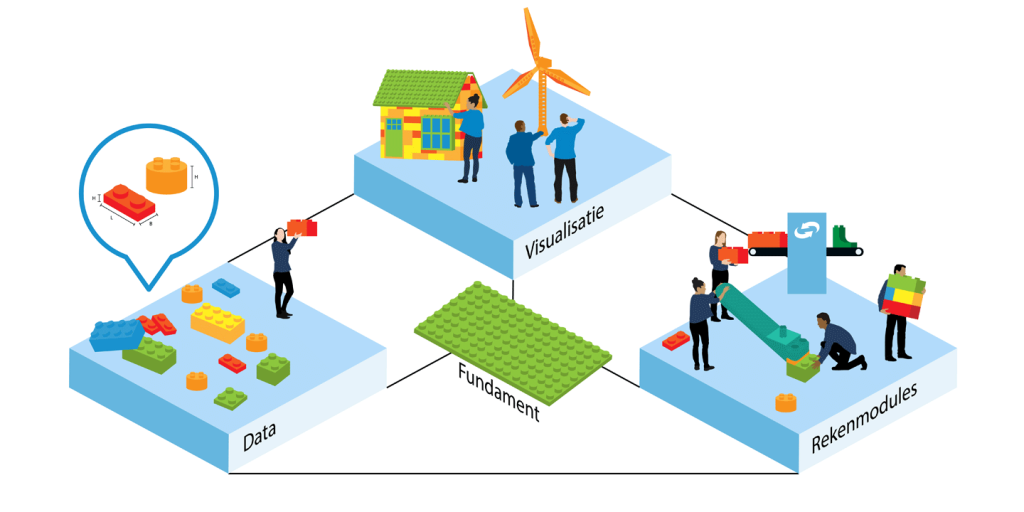

The triangle model

The triangle model consists of three components: the data, the computational model and the visualization.

For instance, the Urbo3D Climate Game (a digital twin of a neighborhood) consists of three parts:

- Data: the 3D data from the Kadaster’s ‘3D Basisvoorziening’, combined with BAG, BGT and climate data from the Klimaateffectatlas.

- Computational model: Models that calculate the Urban Heat Island effect and the flooding effects after changes in the game.

- Visualization: the Godot game engine that visualizes the 3D data and the results of the computational models.

The triangle allows independent development and reuse of components across digital twins. For example, if higher detail is needed for solar panel placement, the ‘3D Basisvoorziening’ can be swapped with ‘3D BAG’ data. Similarly, more accurate flooding models can replace existing ones.
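To make the swapping idea concrete, here is a minimal sketch of the triangle as three interfaces. All class names, the height values, and the flooding rule are hypothetical and chosen only for illustration; this is not how Urbo3D or Geonovum's architecture is implemented.

```python
from dataclasses import dataclass
from typing import Protocol


class DataSource(Protocol):
    def heights(self) -> list[list[float]]: ...


class ComputationalModel(Protocol):
    def run(self, heights: list[list[float]]) -> list[list[float]]: ...


class Visualization(Protocol):
    def render(self, result: list[list[float]]) -> str: ...


class CoarseHeights:
    """Stand-in for a low-detail data source (made-up values)."""

    def heights(self) -> list[list[float]]:
        return [[3.0, 9.0], [6.0, 12.0]]


class DetailedHeights:
    """Stand-in for a higher-detail data source (made-up values)."""

    def heights(self) -> list[list[float]]:
        return [[3.2, 9.1], [5.8, 12.4]]


class ToyFloodModel:
    """Hypothetical rule: a cell floods by the amount it lies below the water level."""

    def __init__(self, water_level: float = 6.0) -> None:
        self.water_level = water_level

    def run(self, heights: list[list[float]]) -> list[list[float]]:
        return [[max(0.0, self.water_level - h) for h in row] for row in heights]


class TextView:
    """Renders flooded cells as '~' and dry cells as '.'."""

    def render(self, result: list[list[float]]) -> str:
        return "\n".join(
            " ".join("~" if depth > 0 else "." for depth in row) for row in result
        )


@dataclass
class DigitalTwin:
    data: DataSource
    model: ComputationalModel
    view: Visualization

    def show(self) -> str:
        # Data flows into the model, and the model output flows into the view.
        return self.view.render(self.model.run(self.data.heights()))
```

Replacing `CoarseHeights()` with `DetailedHeights()` when constructing a `DigitalTwin` changes the result without touching the model or the view, which is the kind of reuse the triangle is meant to allow.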

Components can reside on different servers and be accessed via APIs. However, while data is available through endpoints on PDOK, computational models are not yet API-accessible. Geonovum is exploring ways to make these models interoperable.

So much for the theory. Practice is somewhat messier.

Challenges: The Law of Leaky Abstractions

The term comes from Joel Spolsky’s blog post The Law of Leaky Abstractions. It states that all non-trivial abstractions are, to some degree, leaky: some details cannot be abstracted away, and those details cause problems.

The hairy, leaking detail in this case is the performance of the system. The computational models need data to operate on, so the model and the data need to be brought together: either the (often large and complex) data need to be shipped to the model or the computation needs to be shipped to the data. Both options have their own problems.

- Data shipping: Large, complex 3D data can cause performance issues and synchronization challenges in dynamic environments.

- Computation shipping: Complex models are difficult to execute elsewhere, and data changes remain problematic.

In practice, the 3D data is often shipped to the model, which can cause performance problems. These problems can be mitigated by using techniques like data compression, sampling, and caching. However, these techniques are not trivial to implement and would require the computational model APIs to be more complex.
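As a rough illustration of two of these mitigations, the sketch below samples a height grid and compresses it before it would be shipped to a model. The grid, the sampling step, and the function names are assumptions made for this example; real 3D data is far more complex than a grid of floats.

```python
import struct
import zlib


def downsample(grid, step):
    """Keep every `step`-th row and column (simple sampling)."""
    return [row[::step] for row in grid[::step]]


def pack(grid):
    """Serialize the grid as 32-bit floats and compress the bytes."""
    flat = [value for row in grid for value in row]
    return zlib.compress(struct.pack(f"<{len(flat)}f", *flat))


def unpack(payload, cols):
    """Reverse of pack(): decompress and rebuild rows of `cols` values."""
    raw = zlib.decompress(payload)
    flat = struct.unpack(f"<{len(raw) // 4}f", raw)
    return [list(flat[i:i + cols]) for i in range(0, len(flat), cols)]


# Hypothetical 64 x 64 height grid with large flat areas.
grid = [[float(r // 8) for _ in range(64)] for r in range(64)]
coarse = downsample(grid, 4)   # 16 x 16 cells remain
payload = pack(coarse)         # bytes that would be sent to the model
```

Note that the model API now needs to know the sampling step and the encoding, which is exactly the added complexity mentioned above.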

Performance and usability

Performance is critical for usability, especially in educational games like Urbo3D, where a responsive interface is essential to hold the attention of teenagers. The same holds in a professional setting: slow systems frustrate users and hinder exploration and experimentation.

In the worst case, slow performance will lead users to abandon their digital twins entirely.

Solving Performance Issues in Urbo3D

To achieve performance that is acceptable for our picky teenage users, we used a number of strategies:

- We broke the abstractions

- We reduced the number of computations

- We pushed the computations to the GPU

Breaking the abstractions

The computational models are directly integrated into the Godot visualization engine. This approach eliminates the roundtrip latency between the client and server, offering players the immediate feedback they require. However, it necessitates developing the models in-house rather than utilizing third-party APIs. Consequently, any model replacements will also need to be custom-implemented.

Reducing the number of computations

To minimize computational load, we employ a strategy known as ‘incremental computing’. This approach enables us to update the system’s state more efficiently by avoiding full recalculations.

- Initially, the system’s state is computed using the original model.

- Upon any modification within the digital twin, we selectively update the state only in the geographical area impacted by the change.

For example, when evaluating heat stress in a neighborhood using the RIVM heat-stress model, if a player adds a tree, we focus on recalculating the heat stress changes specifically around the tree’s location, rather than reassessing the entire neighborhood.

This method significantly accelerates the update process, optimizing performance by avoiding unnecessary full-scale recalculations.
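The idea can be sketched with a toy model. The cooling rule, radius, and constants below are invented for illustration and are not the RIVM heat-stress model; the point is only that adding a tree triggers a recomputation of the cells near it.

```python
BASE_TEMP = 30.0  # hypothetical baseline temperature in degrees Celsius
RADIUS = 2        # hypothetical reach of a tree's cooling effect, in grid cells
COOLING = 0.5     # hypothetical cooling per step of proximity


def cell_heat(row, col, trees):
    """Toy model: every tree within RADIUS cools the cell, more so when closer."""
    heat = BASE_TEMP
    for tree_row, tree_col in trees:
        distance = max(abs(row - tree_row), abs(col - tree_col))
        if distance <= RADIUS:
            heat -= COOLING * (RADIUS + 1 - distance)
    return heat


def compute_full(rows, cols, trees):
    """Compute the state of every cell from scratch."""
    return [[cell_heat(r, c, trees) for c in range(cols)] for r in range(rows)]


def add_tree_incremental(state, trees, new_tree):
    """Add a tree and recompute only the cells it can affect.

    Returns the number of cells that were recomputed.
    """
    trees.append(new_tree)
    tree_row, tree_col = new_tree
    rows, cols = len(state), len(state[0])
    updated = 0
    for r in range(max(0, tree_row - RADIUS), min(rows, tree_row + RADIUS + 1)):
        for c in range(max(0, tree_col - RADIUS), min(cols, tree_col + RADIUS + 1)):
            state[r][c] = cell_heat(r, c, trees)
            updated += 1
    return updated


trees = [(2, 2)]
state = compute_full(20, 20, trees)  # initial full computation
updated = add_tree_incremental(state, trees, (10, 10))
print(f"recomputed {updated} of {20 * 20} cells")  # recomputed 25 of 400 cells
```

This shortcut is only valid because the toy model is local: a tree never affects cells beyond `RADIUS`. A model with long-range effects would need a larger update window or a different strategy.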

Pushing the computations to the GPU

To push the performance even further, we leverage the GPU for computations by utilizing Godot’s Shaders.

Shaders are specialized programs that run on Graphics Processing Units (GPUs). While they were originally designed for shading 3D scenes, their capabilities have expanded significantly. In our application, shaders are employed to handle the model computations. These shaders are written in a language derived from GLSL (OpenGL Shading Language) within the Godot engine.
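The sketch below shows, in plain Python rather than in Godot's shading language, the shape a computation needs to have before it fits in a shader: one small function that computes a single output cell and reads only from its inputs. The kernel, its parameters, and the tree mask are hypothetical; the actual Urbo3D shaders are not shown here.

```python
def heat_kernel(row, col, tree_mask, base_temp=30.0, cooling=1.0):
    """Compute one output cell, comparable to a single shader invocation.

    It only reads from the input grid and returns the value for its own
    cell, so the result does not depend on the order of invocations.
    """
    rows, cols = len(tree_mask), len(tree_mask[0])
    heat = base_temp
    # Look at the cell itself and its eight neighbours.
    for d_row in (-1, 0, 1):
        for d_col in (-1, 0, 1):
            r, c = row + d_row, col + d_col
            if 0 <= r < rows and 0 <= c < cols and tree_mask[r][c]:
                heat -= cooling
    return heat


def run_kernel(kernel, tree_mask):
    """Apply the kernel to every cell.

    This loop runs sequentially on the CPU; on a GPU the invocations
    would run in parallel.
    """
    rows, cols = len(tree_mask), len(tree_mask[0])
    return [[kernel(r, c, tree_mask) for c in range(cols)] for r in range(rows)]
```

Because every cell is computed independently, the work can be spread over the many cores of a GPU, which is where the speed-up comes from.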

Conclusion

By combining three strategies (breaking the abstractions, reducing the number of computations, and pushing the computations to the GPU), we addressed the performance challenges in the Urbo3D Climate Game. Together they made the interface responsive enough for our picky teenage audience, and responsiveness matters just as much in professional applications.

Despite these advancements, achieving optimal performance required us to deviate significantly from the original triangle model. This deviation involved tightly coupling data, computational models, and visualization into a more cohesive system. While this approach provided the necessary performance boost, it also highlighted the limitations of the triangle model in scenarios demanding real-time interaction and feedback.

This experience underscores the importance of flexibility in digital twin architectures. While the triangle model offers a robust framework for component reuse and modularity, certain use cases may necessitate a more integrated approach to meet specific performance and usability requirements. Moving forward, a hybrid model that combines the strengths of both abstraction and integration could offer a balanced solution, accommodating the diverse needs of digital twin applications.